Tesla prevails in first Autopilot wrongful-death suit

A jury has found Tesla not at fault in a wrongful-death lawsuit which alleged that Autopilot caused a 2019 crash that killed the driver and seriously injured two passengers.

In question was the death of 37-year-old Micah Lee, who was driving a Model 3 in 2019 in Menifee, CA (in the Inland Empire to the east of Los Angeles), and hit a palm tree at approximately 65 miles per hour, causing his death and the injury of two passengers, including an 8-year-old boy. The lawsuit was brought by the passengers.

The lawsuit alleged that Tesla knowingly marketed unsafe experimental software to the public, and that safety defects within the system led to the crash (in particular, a specific steering issue that was known by Tesla). Tesla responded that the driver had consumed alcohol (the driver’s blood alcohol level was at .05%, below California’s .08% legal limit) and that the driver is still responsible for driving when Autopilot is turned on.

A survivor in the vehicle at the time of the accident claimed that Autopilot was turned on at the time of the crash.

Tesla disputed this, saying it was unclear whether Autopilot was turned on – a departure from its typical modus operandi, which involves pulling vehicle logs and stating definitively whether and when Autopilot was on or off. That said, Tesla has sometimes made such claims when Autopilot disengaged only moments before a crash, when avoidance was no longer possible for the driver.

After four days of deliberations, the jury decided in Tesla’s favor, with a 9-3 decision that Tesla was not culpable.

While Tesla has won an Autopilot injury lawsuit before, in April of this year, this is the first resolved lawsuit that has involved a death. That earlier lawsuit turned on the same reasoning – that drivers are still responsible for what happens behind the wheel while Autopilot or Full Self-Driving is engaged (despite the name of the latter system suggesting otherwise). Full Self-Driving was not publicly available at the time of Lee’s crash, though he had purchased the system for $6,000 expecting it to be available in the future.

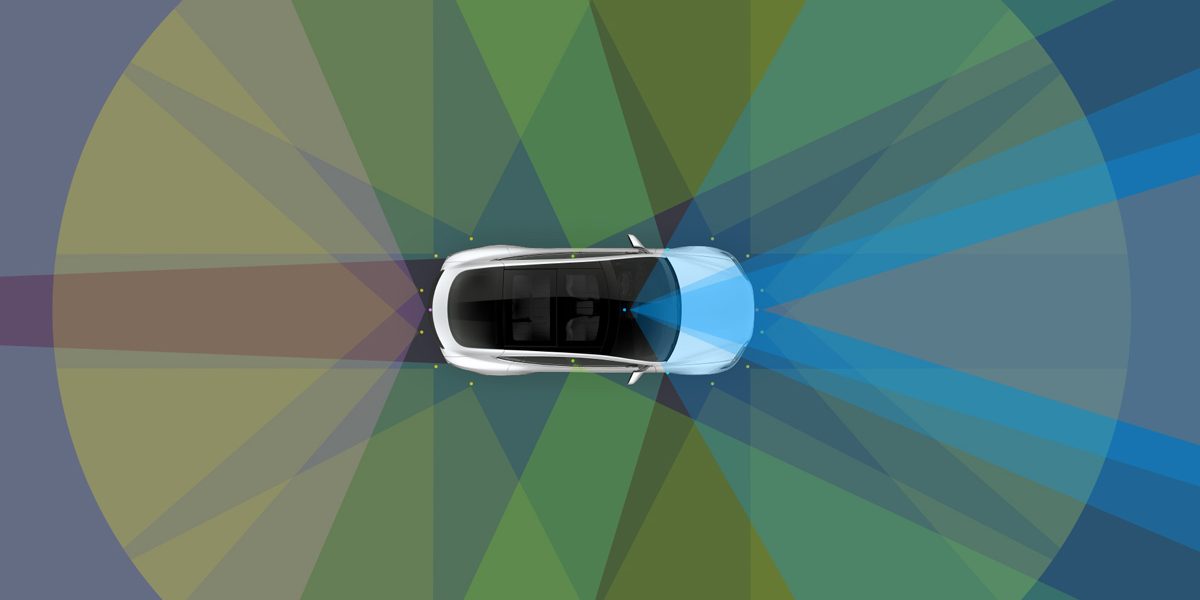

Both of Tesla’s autonomous systems are “level 2” on the SAE’s driving automation scale, like most other new autonomous driving systems on the market these days. Although Autopilot is intended for highway use, Tesla’s FSD system can be activated in more situations than most cars’ systems. But there is no point at which the car assumes responsibility for driving – that responsibility always lies with the driver.

Since the trial began last month, Tesla CEO Elon Musk made a notable comment during his disastrous presence on Tesla’s Q3 conference call. He was asked whether and when Tesla would accept legal liability for autonomous drive systems, as Mercedes has just started doing with its Level 3 DRIVE PILOT system, the first of its kind in the US (read about our test drive of it in LA). Musk responded saying:

Well, there’s a lot of people that assume we have legal liability judging by the lawsuits. We’re certainly not being let off the hook on that front, whether we’d like to or wouldn’t like to.

Elon Musk, CEO, Tesla

Later in the answer, Musk called Tesla’s AI systems “baby AGI.” AGI is an acronym for “artificial general intelligence,” which is a theorized technology for when computers become good enough at all tasks to be able to replace a human in basically any situation, not just in specialized situations. In short, it’s not what Tesla has and has nothing to do with the question.

Tesla is indeed currently facing several lawsuits over injuries and deaths that have happened in its vehicles, many alleging that Autopilot or FSD are responsible. In one, Tesla tried to argue in court that Musk’s recorded statements on self-driving “might have been deep fakes.”

We also learned recently, at the release of Musk’s biography, that he wanted to use Tesla’s in-car camera to spy on drivers and win Autopilot lawsuits, though that was apparently not necessary in this case.

City Dwellers’ Take

Questions like the one asked in this trial are interesting and difficult to answer, because they pit three things against each other: legal liability, marketing materials, and public perception.

Tesla is quite clear in official communications, like in operating manuals, in the car’s software itself, and so on, that drivers are still responsible for the vehicle when using Autopilot. Drivers accept an agreement to that effect when first turning on the system.

Or at least, I think they do, since the first time I accepted it was so long ago. And that is the rub. People are also used to accepting long agreements whenever they turn on any system or use any piece of technology, and nobody reads those. Sometimes, these terms even include legally unenforceable provisions, depending on the venue in question.

And then, in terms of public perception, marketing, and in how Tesla has deliberately named the system, there is a view that Tesla’s cars really can drive themselves. Here’s Tesla explicitly saying “the car is driving itself” in 2016.

We here at City Dwellers, and our readership, know the difference between all of these concepts. We know that “Full Self-Driving” was (supposedly) named that way so that people can buy it ahead of time and eventually get access to the system when it finally reaches full self-driving capability (which should happen, uh, “next year”… in any given year). We know that “Autopilot” is meant to be a reference to how it works in airplanes, where a pilot is still required in the seat to take care of tasks other than cruising steadily. We know that Tesla only has a level 2 system, and that drivers still accept legal responsibility.

But when the general public gets a hold of technology, they tend to do things that you didn’t expect. That’s why caution is generally favorable when releasing experimental things to the public (and, early on, Tesla used to do this – giving early access to new Autopilot/FSD features to trusted beta testers, before wide release).

Despite being told before activating the software, and reminded often while the software is on, that the driver must keep their hands on the wheel, we all know that drivers don’t do that, and that they pay less attention when the system is activated than when it isn’t. Studies have shown this as well.

And so, while the jury found (probably correctly) that Tesla is not liable here, this is perhaps a good reminder to all Tesla drivers to keep paying attention to the road while Autopilot/FSD is on: you are still driving, so act like it. Still, we think there is room for discussion about Tesla doing a better job of ensuring attention (for example, it just rolled out a driver attention monitoring feature using the cabin camera, six years after it started including those cameras in the Model 3).
